A Short History of AI

- MIU Blog

- 06 October 2023

Artificial Intelligence (AI) has dominated the tech scene recently, with tools such as the chatbot ChatGPT, AI generators of 4chan-style green text stories, and the image generator Midjourney showcasing the impressive modern capabilities of AI and making it easily accessible to global audiences. The industry is still growing: market forecasts project that the AI market will grow at an annual rate of 37.3% from 2023 to 2030.

Despite all the hype surrounding AI in the past few years, it is not new. You may not always notice it, but AI and data analytics are integral to many industries and to daily life. This article traces AI’s historical development and evolution through the decades and explores how it has impacted society.

What is AI?

AI refers to computer or machine systems that can complete tasks that would otherwise require human intelligence. Alan Turing was one of the pioneers of ‘intelligent machines’. He set out his ideas in his revolutionary 1950 paper, Computing Machinery and Intelligence.

Rapid technological advancements from the 1950s to today have enabled data-driven AI to learn and make decisions based on accumulated data and patterns. AI is used to improve speed and accuracy and to overcome human limitations in performing various tasks.

AI’s Early Origins

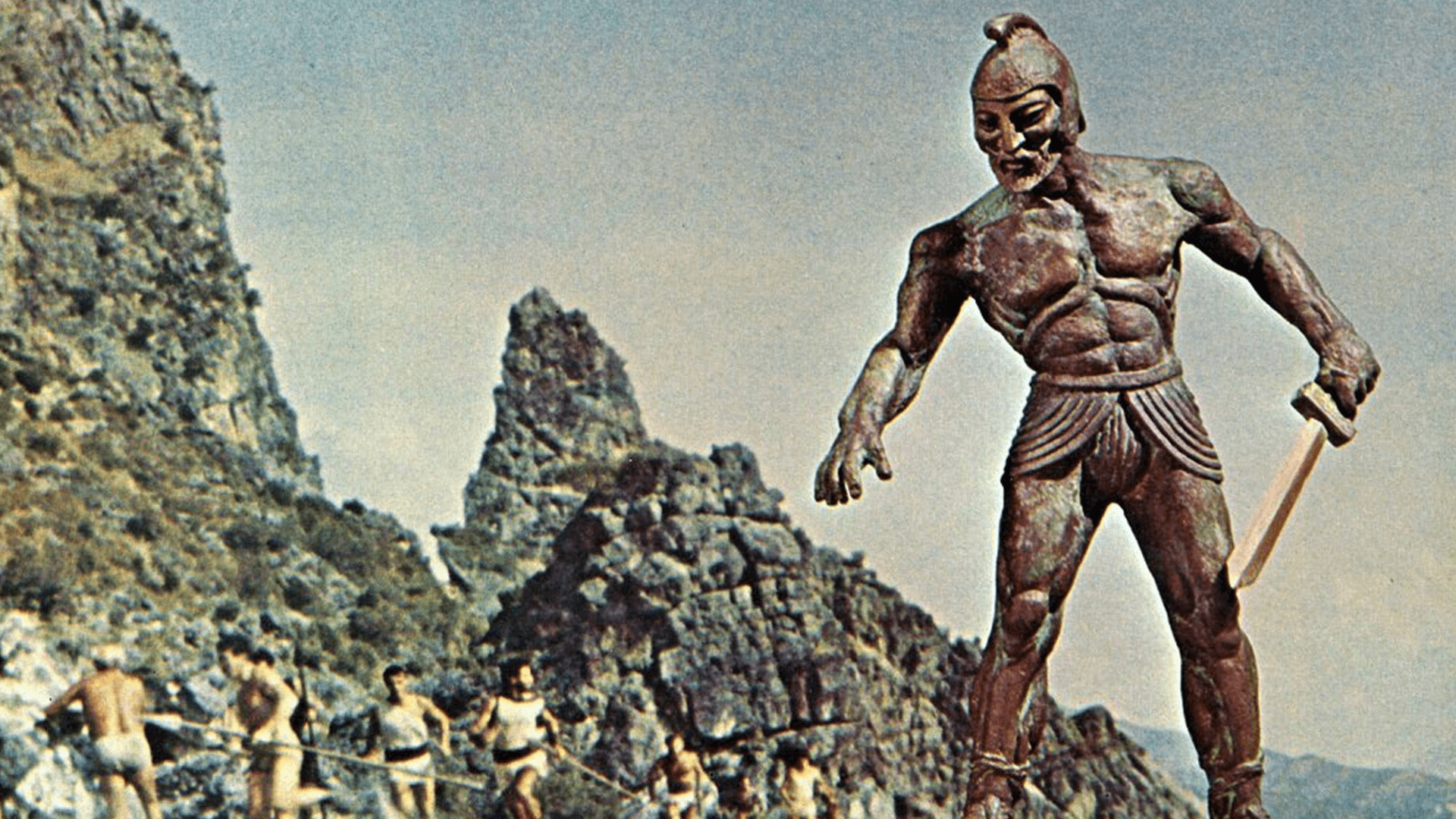

The concept of intelligent machinery dates back to ancient civilisations. Ancient Greek epics by Homer mention ships and tools that independently perform tasks ranging from monotonous, repetitive work to heeding the commands of human masters. Human fascination with artificial intelligence has revolved around making tasks more efficient and delegating menial work to subservient ‘artificial slaves’.

Numerous ancient civilisations, including China and the Latin Christian West, have explored the concept of automatons and mechanical inventions. Most of these portrayals involved automatic warriors, sentries, or guards protecting sacred tombs, relics, and places of significance.

Later, the term ‘robot’ was introduced by Karel Čapek in his 1920 play R.U.R. (Rossum’s Universal Robots). The play depicts a factory that manufactures artificial people, who eventually rebel against their human makers.

Most of these early conceptual and practical expressions explored the boundaries between living and non-living intelligence.

The Emergence of AI as a Field of Study

Dartmouth Conference

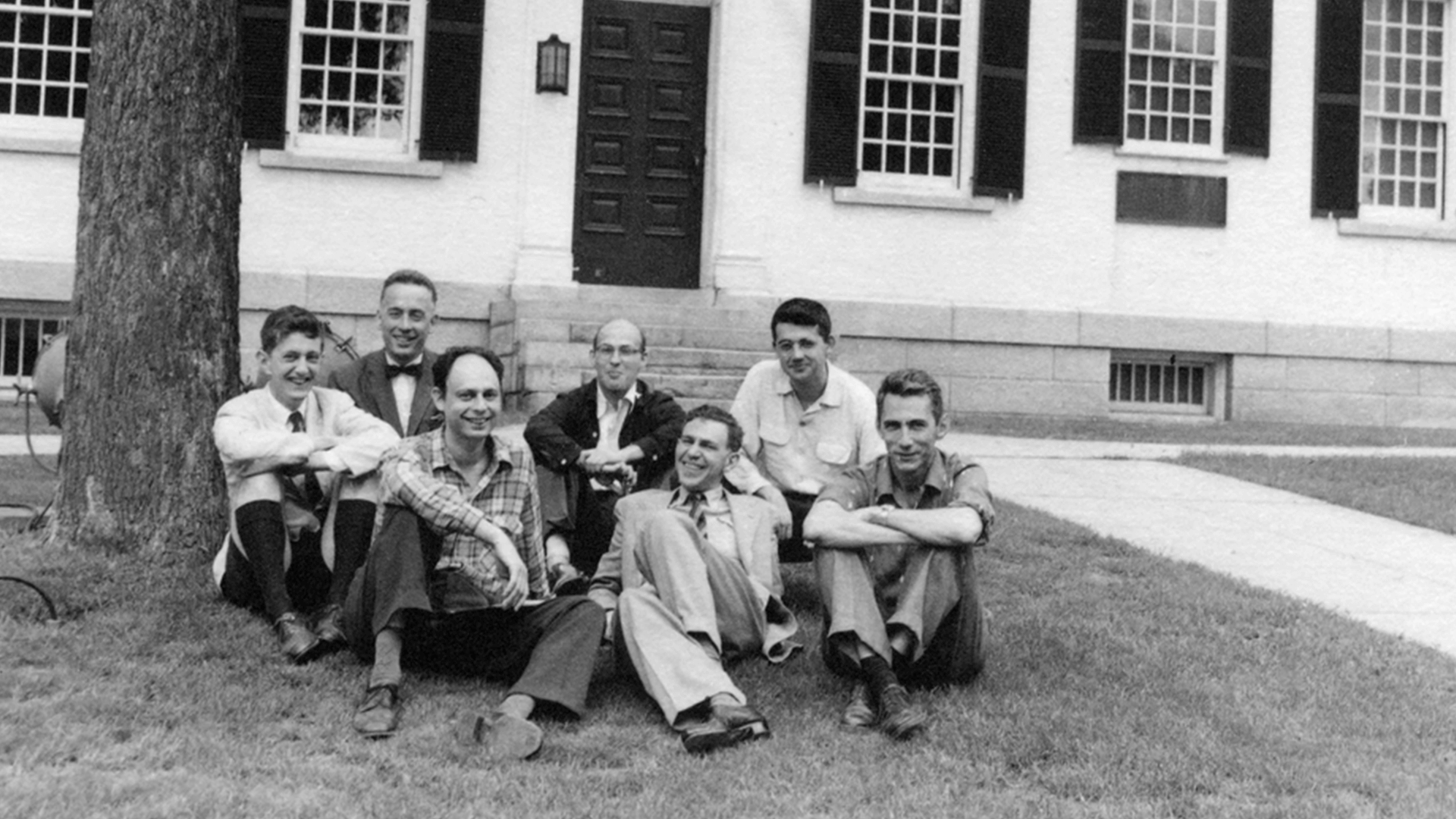

AI was formalised as a scientific field and academic discipline in the 1950s. The first-ever AI conference, the Dartmouth Conference, took place in 1956 at Dartmouth College in the USA.

This landmark conference is regarded as the birthplace and founding moment of AI.

“Artificial intelligence” was coined as a term on August 31, 1955, in a research project proposal submitted by ‘the big four’ researchers: John McCarthy from Dartmouth College, Marvin Minsky from Harvard University, Nathaniel Rochester from IBM (International Business Machines), and Claude Shannon from Bell Telephone Laboratories. This proposal was the basis of the 1956 Dartmouth Conference, which discussed many of the prevailing problems in the field of AI at that time.

Alan Turing

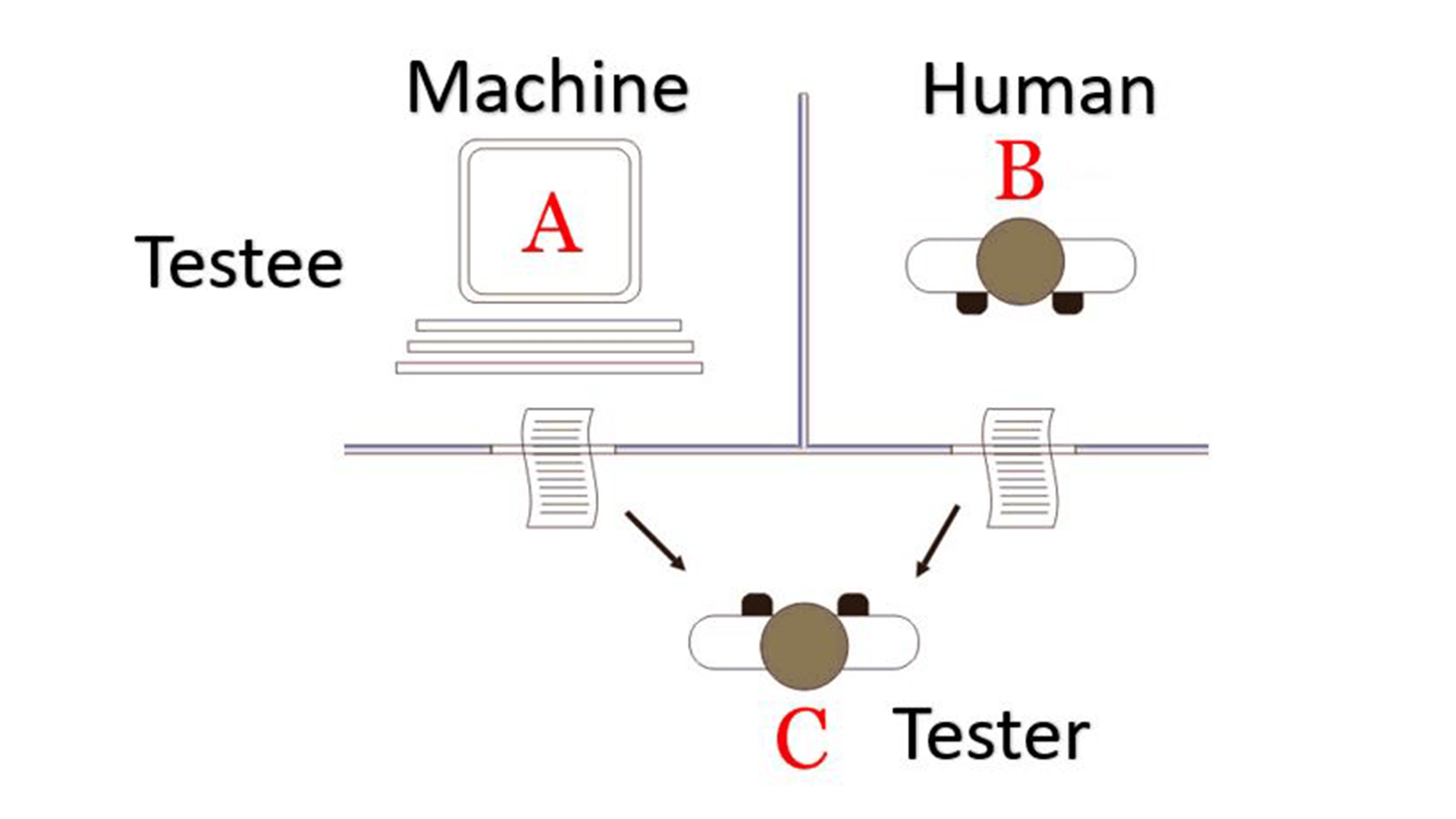

Another prominent researcher in the field of AI was British mathematician and computer scientist Alan Turing. He devised the Turing Machine, an idealised computing device that could store data in memory, receive input, and produce output. This concept underpins the fundamental principles of modern-day problem-solving in programming.
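To make the idea more concrete, here is a minimal Python sketch of a one-tape Turing machine. It is an illustration only, not Turing's original formulation: the function name `run_turing_machine`, the rule table `INVERT_RULES`, the state names, and the `_` blank symbol are all assumptions made for this example. The machine reads a symbol from its tape (input), looks up a rule, writes a symbol back (memory), and moves its head until it halts, leaving the result on the tape (output).

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". All names here are illustrative assumptions.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)              # read
        write, move, state = rules[(state, symbol)]  # look up the rule
        cells[head] = write                          # write
        head += 1 if move == "R" else -1             # move the head
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# A hypothetical rule table that flips every bit, then halts at the first blank.
INVERT_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine(INVERT_RULES, "1011"))  # prints 0100
```

Swapping in a different rule table changes what the machine computes, which is the sense in which one simple device can stand in for many different programs.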

Later on, Alan Turing proposed the famous Turing Test in his 1950 research paper, Computing Machinery and Intelligence. The test asks whether a human examiner can distinguish between a computer’s and a human’s answers. If the examiner cannot tell them apart over a specified number of test runs, the computer is considered intelligent. The Turing Test is still regarded by many experts as a benchmark for intelligence testing.

AI in Science Fiction and Pop Culture

AI became popular through media like science fiction literature, movies, and television shows. Some of the most popular science fiction works exploring artificial sentience and its impact on humanity are Mary Shelley’s Frankenstein (1818) and Stanley Kubrick’s 2001: A Space Odyssey (1968), which features the computer HAL 9000. Other popular portrayals include R2-D2 and C-3PO from Star Wars, Agent Smith from The Matrix, the T-800 from the Terminator movies, and the artificial human replicants from Ridley Scott’s 1982 Blade Runner, which is based on Philip K. Dick’s novel ‘Do Androids Dream of Electric Sheep?’ (1968).

AI: Friend or Foe?

Media portrayals of artificial intelligence range from the benign to the terrifying. Positive characterisations include ‘droids’ like R2-D2 and C-3PO, which exist to serve and help their human owners. Other characters, like HAL 9000 and the T-800, are written as vicious and cold-blooded killing machines with no independent sense of morality.

1960s-1970s: A Cyberpunk Dystopia

Science fiction has long explored concerns about artificial intelligence and the future of humans. Fictional tropes such as “dystopian” and “cyberpunk” arose from the new wave science fiction trend of the 1960s and 1970s, which popularised moody depictions of dark corporate hellscapes lit up in rainbow-coloured neon, plagued by socio-economic inequality, dangerous new technology, and ecological collapse. These were portrayals of future human societies altered beyond recognition by the insistent and deadly forward march of technology and capitalism.

Modern Pop Culture: Will AI Take Over Humanity?

We are now several decades past those early science fiction movements, but socio-cultural anxieties about AI have not disappeared completely.

A thread of unease has always run through AI discourse in pop culture. Recent productions such as singer-songwriter Grimes’s ‘We Appreciate Power’ (2018) showcase a nihilistic, dystopian view of the future where humans ‘pledge allegiance to the world’s most powerful computer’.

The ominous lyrics are whimsically creepy, with distorted instrumentation that recalls the dark and delightful phantasmagoria of the new-wave cyberpunk aesthetic. The overall effect is sinister yet thrilling – similar to society’s attitude towards AI today.

Early AI Applications

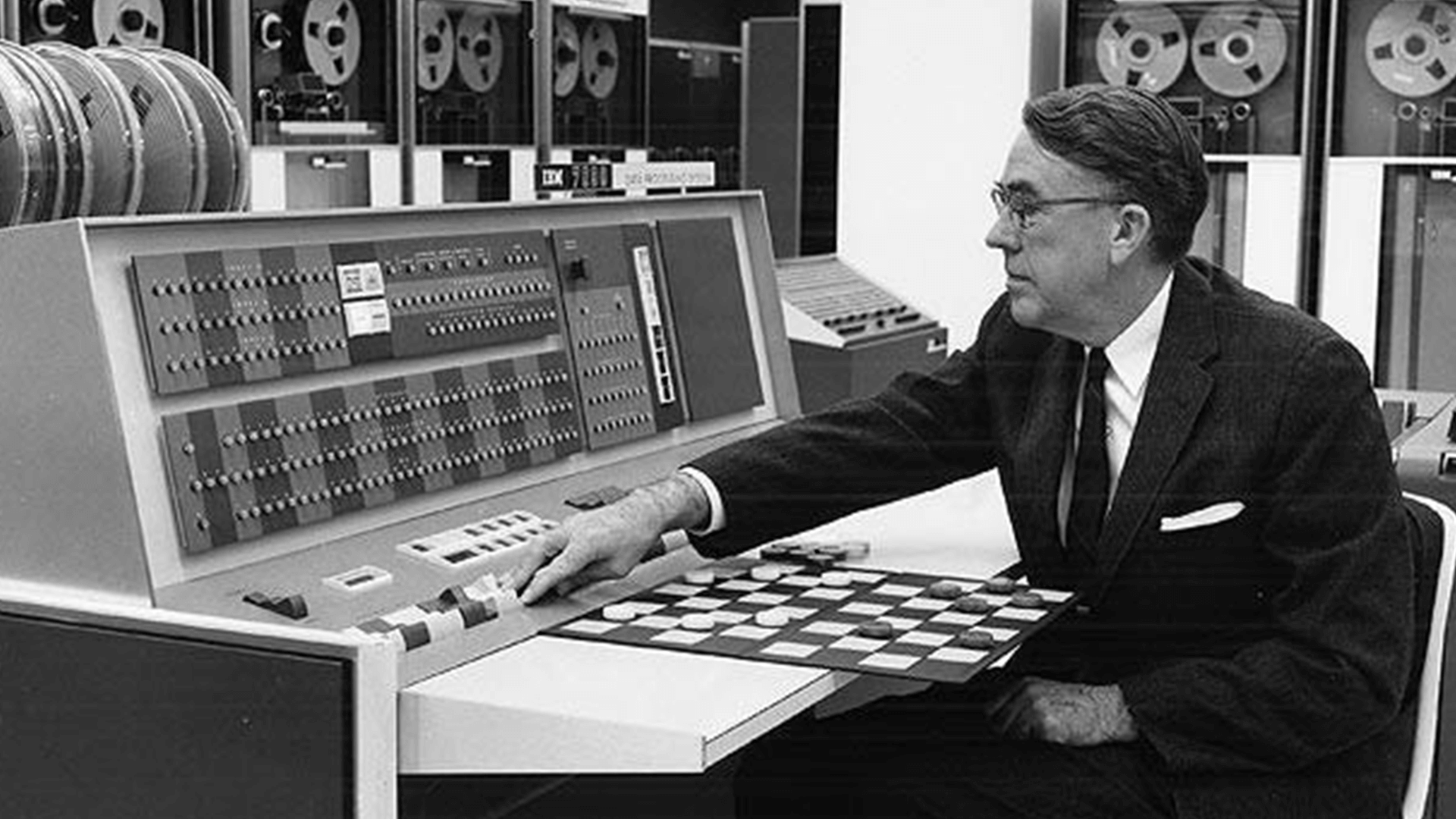

Early applications of AI were made possible by the invention of digital computers in the late 1940s and 1950s. In the early 1950s, Arthur Lee Samuel, a former electrical engineering instructor at MIT who was by then working at IBM, pioneered the first self-learning checkers programme on the IBM 701. It was called the Samuel Checkers-Playing programme.

Other notable projects followed. In 1966, Joseph Weizenbaum, a German-born computer scientist at MIT, created ELIZA.

ELIZA was a chatbot that played the role of a psychotherapist, using basic natural language processing (NLP) in the form of keyword and pattern matching to communicate with the human user.
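The flavour of this approach can be shown with a few lines of Python. This is a rough sketch in the spirit of ELIZA, not Weizenbaum's actual program or script: the rules in `RULES`, the `REFLECTIONS` table, and the function names `reflect` and `respond` are all hypothetical and chosen only for illustration. The program looks for a keyword pattern in the user's message, swaps first-person and second-person words, and slots the result into a canned reply.

```python
import re

# Swap first-person and second-person words so replies read naturally.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are",
               "you": "I", "your": "my"}

# Hypothetical rules: (pattern to look for, reply template).
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Replace words like 'my' with 'your' in a fragment of the user's text."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(message):
    """Return the reply for the first rule whose pattern appears in the message."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_REPLY


print(respond("I need my space."))  # prints: Why do you need your space?
print(respond("Hello"))             # prints: Please go on.
```

Nothing here understands the conversation. The program only matches text and rearranges it, which is why such a simple technique could still feel surprisingly human to its users.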

In the 1980s, a research team from Carnegie Mellon University started developing Deep Thought, an AI chess programme. In 1989, it lost to Garry Kasparov, the reigning world chess champion.

IBM later built on Deep Thought to create its successor, Deep Blue, which famously won one of the six games in its 1996 match against Garry Kasparov and went on to win their 1997 rematch. It was a breakthrough for AI and a milestone in chess and computing. It showed that computers with AI could make decisions in a domain long thought to require human intelligence.

AI Winter and Renaissance

An ‘AI Winter’ occurred from 1974 to 1980 due to a slowdown in the progression of AI capabilities. The AI Winter occurred partly due to Sir James Lighthill’s 1973 report, the Lighthill Report, which criticised AI research for failing to meet its ambitious objectives. The UK government subsequently cut funding for AI research.

AI Resurgence

AI experienced a resurgence beginning in 1983, and the field has kept growing ever since. Researchers and developers began to focus on machine learning and data analytics in AI. They trained machines to learn from vast reserves of available data and improve upon previous experiences. Computer hardware, including GPUs, also improved to keep up with processing the large amounts of data used for machine learning.

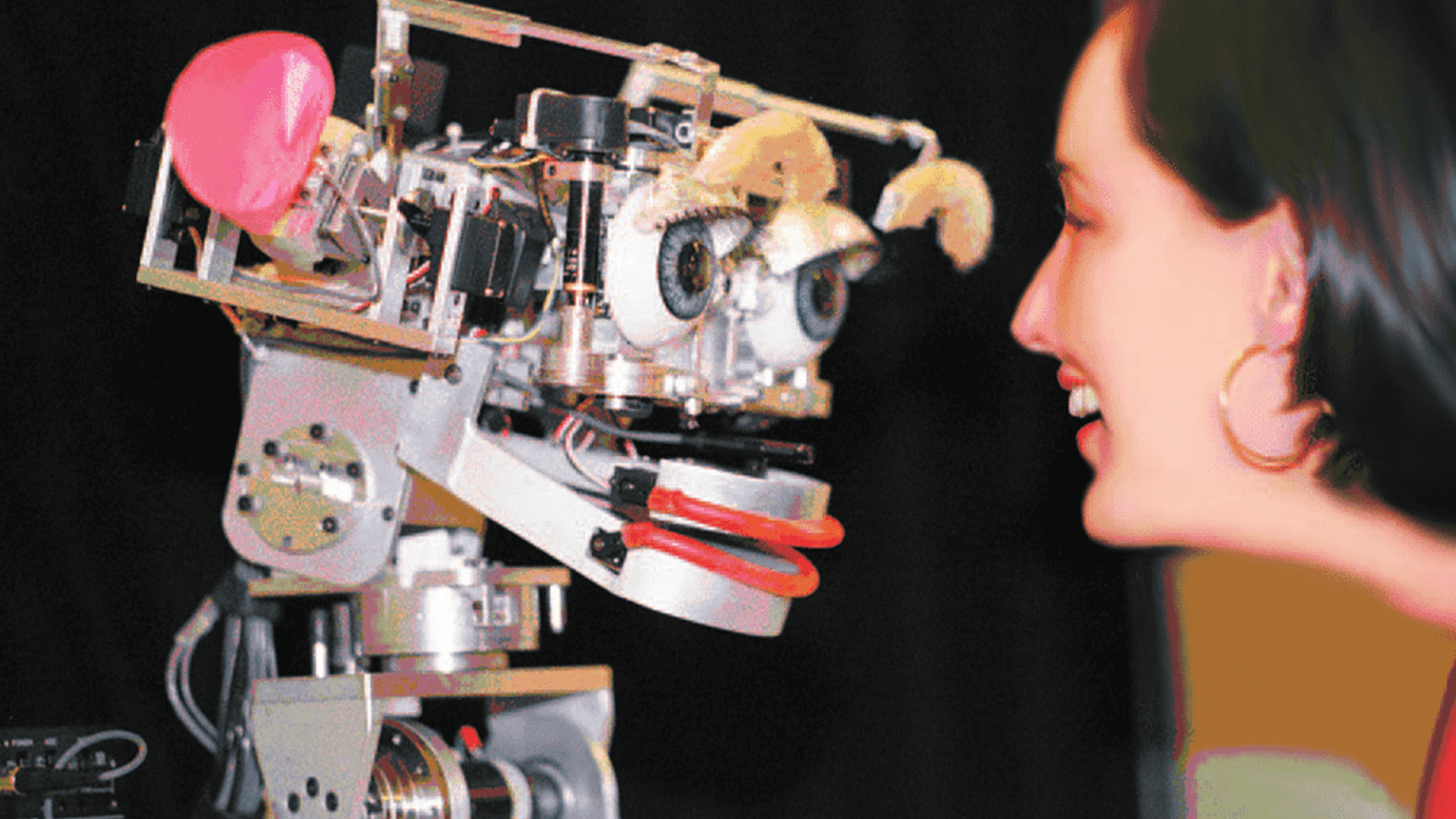

As a result, AI technology made numerous advancements in speech recognition and natural language processing. This rapid development led to breakthroughs like the 1996 Deep Blue chess match and Dr. Cynthia Breazeal’s Kismet.

Kismet was a social interaction robot that developed its reactions by perceiving the emotional responses of humans around it.

How Artificial Intelligence Will Change the Future

Today, AI is a part of our everyday lives. Computer memory storage, computing power, and processing speeds have significantly improved over the last two decades. The use of big data has enabled AI to learn from analysing vast amounts of data. From engineering to entertainment, automation and AI-powered analytics tools power our economy and society. Automated analytics software helps with data entry, processing, and analysis in banking and finance. The future of data-centric AI will see more autonomous AI-driven data analytics programs capable of conducting complex studies.

AI is becoming more people-oriented as it adapts to human speech patterns. Chatbots use natural language processing (NLP) to have realistic and informative conversations with human users.

The End of Human Creativity?

Creative AI tools like ChatGPT, Bard, DALL·E, and Midjourney help generate text and images.

Does this mean that AI spells trouble for creatives?

Not necessarily. Creatives can use AI generation tools to streamline their work and enhance their creativity. Furthermore, AI cannot interpret meaning and only generates content based on what already exists. Human creativity offers new perspectives and interpretations that go beyond existing ideas.

How will AI Change the Future of Work?

The role of artificial intelligence in future technology will become more dominant as many industries adopt AI. Some examples:

- Automation expedites routine tasks in many industries.

- Augmented Reality (AR) and Virtual Reality (VR) transform the customer experience in retail.

- AR and VR also transform the game and media industries.

- Predictive AI forecasts trends in finance and STEM industries using patterns from historical data analysis and data mining.

- Generative AI helps with content creation in creative industries.

- Smart assistants support mobile and web applications in education, transport, navigation, and social media.

Inventions like autonomous vehicles or surveillance robots are no longer ideas or science fiction.

Ethical Concerns

The development of AI has inevitably raised ethical questions about its usage. In 2023, one of the most significant ethical questions regarding AI was whether OpenAI’s inventions – ChatGPT and DALL·E – threatened the value of creativity that we previously thought distinguished the human race from robots.

Ethicists are also concerned about the use of generative AI tools for nefarious ends, such as creating deepfakes to defame or blackmail people. As AI becomes more accessible to the general population, we must carefully consider the moral implications of its widespread usage.

Conclusion

AI has come a long way since the early conceptual discussions and research of the 1920s-1950s. As discussed, artificial intelligence will play an increasingly autonomous, creative, and analytical role in future technology. AI has the potential to transform work and daily life radically, but we must also remember that it is a tool that people should use responsibly to better human society.
